Deep Neural Pilot on Skydio 2

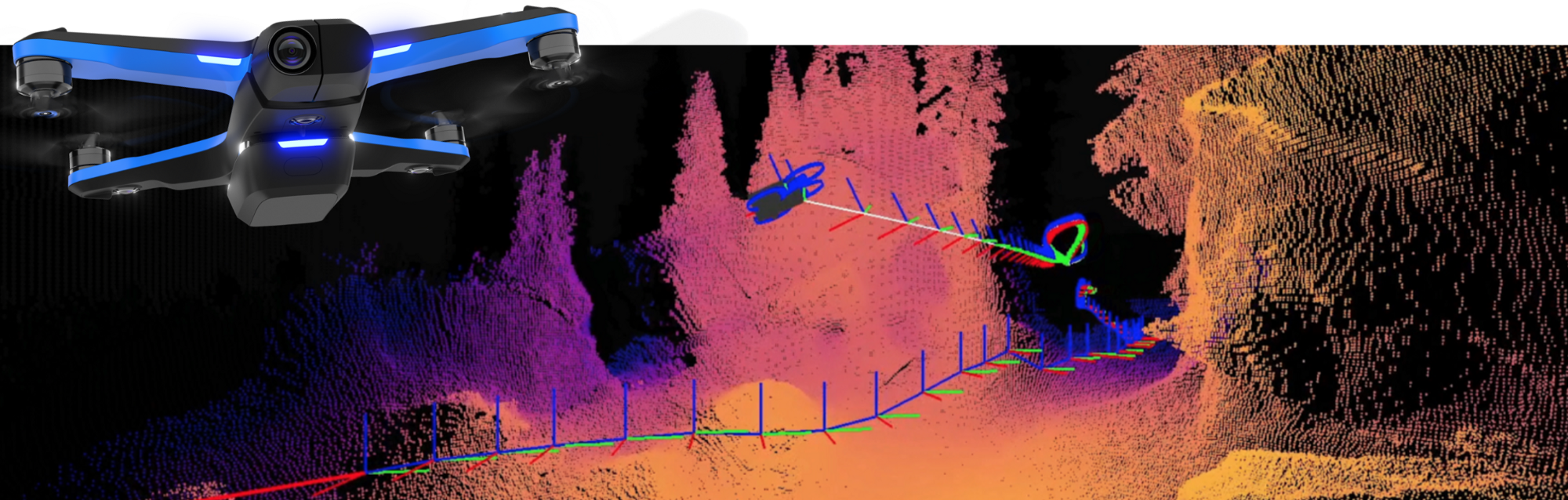

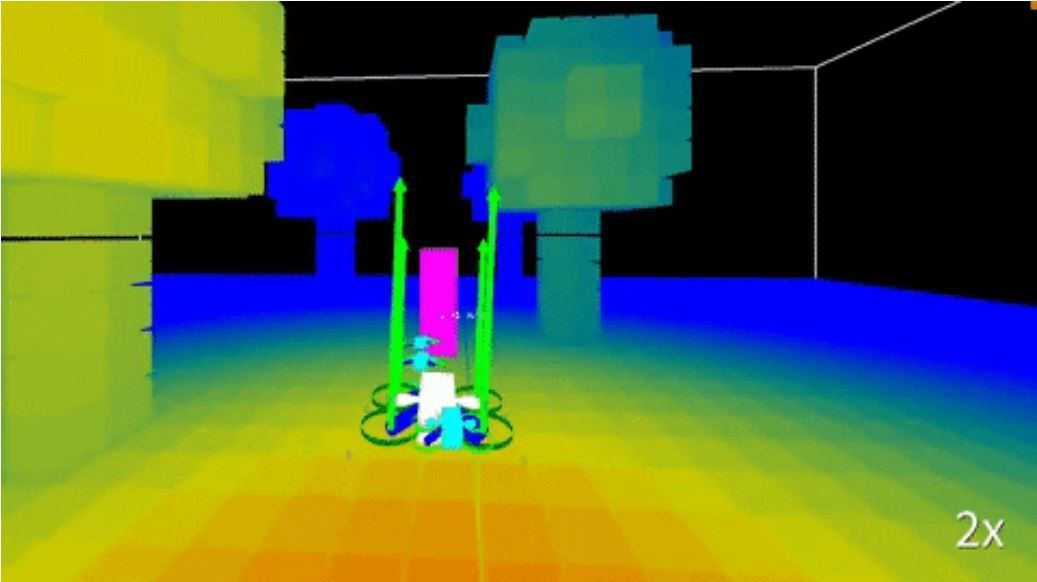

At Skydio, we have been developing and deploying machine learning systems for years because they scale and improve with data. Until now, however, our learning systems have only interpreted information about the world; in this post, we present our first machine learning system that acts in the world. Using a novel learning algorithm, the Skydio Autonomy Engine, and only 3 hours of “off-policy” logged data (recorded while a different policy, not the network being trained, was flying), we trained a deep neural network pilot that can film while avoiding obstacles.

We approached training a deep neural network pilot as an imitation learning problem, in which the goal is to train a model that imitates an expert. Imitation learning was appealing because we have a huge trove of flight data flown by an excellent drone pilot: the motion planner inside the Skydio Autonomy Engine. However, we quickly found that standard imitation learning performed poorly in our challenging, real-world problem domain.

Standard imitation learning worked well in easy scenarios but did not generalize to difficult ones. We hypothesize that the expert’s trajectory alone is not a rich enough signal to learn from efficiently. This is especially true when flying through the air: there are often many obstacle-free paths that all yield cinematic video, so the expert’s exact choice of path says little about what actually matters. Because most logged scenarios are easy, they dominate the training signal, and the rare difficult ones, where mistakes matter most, contribute comparatively little.
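A toy sketch of why the expert’s trajectory alone is a weak signal: a standard behavior-cloning loss, the squared distance between the learner’s action and the expert’s action, scores every deviation of the same size identically, regardless of where it leads. The 2-D velocity actions and the idea of an obstacle on the right are hypothetical; this is a textbook loss for illustration, not Skydio’s training code.

```python
Action = tuple[float, float]  # hypothetical 2-D velocity command (vx, vy) in m/s


def bc_loss(learner: Action, expert: Action) -> float:
    """Standard behavior-cloning loss: squared distance between the two actions."""
    return sum((a - b) ** 2 for a, b in zip(learner, expert))


expert_action = (1.0, 0.0)   # hypothetical: expert flies straight ahead at 1 m/s
swerve_left = (1.0, 0.5)     # deviation into open space: harmless
swerve_right = (1.0, -0.5)   # same-size deviation toward an obstacle: dangerous

print(bc_loss(swerve_left, expert_action))   # 0.25
print(bc_loss(swerve_right, expert_action))  # 0.25
```

The loss only sees the distance between actions, so it cannot tell the harmless swerve from the dangerous one.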

How can we do better? Our insight is that we don’t have just any expert; we have a computational expert: the Skydio Autonomy Engine. Therefore, instead of imitating what the expert does, we learn what the expert cares about. We call this approach Computational Expert Imitation Learning, or CEILing.

Why is CEILing better than standard imitation learning? Consider a didactic example in which a teacher is trying to teach a student how to do multiplication. The teacher is deciding between two possible lesson plans. The first lesson plan is to give the student a bunch of multiplication problems, along with the answer key, and leave the student to figure out on their own how multiplication works. The second lesson plan is to let the student attempt some multiplication problems, give the student feedback on the exact mistakes they made, and continue until the student has mastered the topic.

Which lesson plan should the teacher choose? The second lesson plan is likely to be more effective because the student learns not only the correct answer but also why the answer is correct. This lets the student solve multiplication problems they have never encountered before.

The same insight applies to robot navigation: some deviations from the expert should be penalized more heavily than others. For example, deviating from the expert is generally fine in open space, but it is a critical mistake if it heads toward an obstacle or causes the drone to lose sight of the subject. CEILing lets the expert convey this information, instead of blindly penalizing every deviation from the expert’s trajectory. This is why CEILing trains a deep neural pilot that generalizes well with little data. In addition, because training uses logged data, the expert can access acausal (future) information, such as where the tracked subject actually went, which opens a path for the learned system to eventually outperform the expert that trains it.
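A minimal sketch of the weighting idea, under loudly stated assumptions: `Scene`, `expert_cost`, and `cost_aware_loss` are hypothetical names; the expert’s cost is reduced to a single obstacle-clearance term (the text notes the real expert also cares about keeping the subject in view); and the loss simply adds a mild deviation penalty to the expert’s cost of the learner’s action. It illustrates the principle of letting the expert’s cost shape the penalty; it is not Skydio’s CEILing implementation.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float]  # hypothetical 2-D position (m) or velocity (m/s)


@dataclass
class Scene:
    drone: Vec        # current drone position
    obstacle: Vec     # nearest obstacle position
    dt: float = 1.0   # lookahead horizon in seconds


def expert_cost(scene: Scene, action: Vec, safe_radius: float = 2.0) -> float:
    """Hypothetical stand-in for the computational expert's cost.

    Rolls the drone forward one step and penalizes ending up inside
    safe_radius of the obstacle, quadratically and scaled by 100.
    """
    nxt = (scene.drone[0] + action[0] * scene.dt,
           scene.drone[1] + action[1] * scene.dt)
    intrusion = max(0.0, safe_radius - math.dist(nxt, scene.obstacle))
    return 100.0 * intrusion ** 2


def cost_aware_loss(scene: Scene, learner: Vec, expert: Vec,
                    deviation_weight: float = 0.1) -> float:
    """Mild penalty for any deviation, plus the expert's cost of the learner's action."""
    deviation = sum((a - b) ** 2 for a, b in zip(learner, expert))
    return deviation_weight * deviation + expert_cost(scene, learner)


scene = Scene(drone=(0.0, 0.0), obstacle=(1.0, -2.0))
expert_action = (1.0, 0.0)   # expert's next position stays exactly 2 m from the obstacle
swerve_left = (1.0, 0.5)     # deviation into open space
swerve_right = (1.0, -0.5)   # same-size deviation toward the obstacle

print(cost_aware_loss(scene, swerve_left, expert_action))   # small: deviation term only
print(cost_aware_loss(scene, swerve_right, expert_action))  # large: dominated by obstacle term
```

Both swerves deviate from the expert by the same amount, so a plain imitation loss would score them identically; here the one that cuts inside the obstacle’s safety radius is penalized roughly a thousand times more heavily.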

Although there is still much work to do before the learned system outperforms our production system, we believe in pursuing leapfrog technologies. Deep reinforcement learning techniques promise to let us improve our entire system in a data-driven way, which we expect will lead to an even smarter autonomous flying camera.

Gregory Kahn is a Ph.D. student at UC Berkeley, advised by Pieter Abbeel and Sergey Levine, and was a research intern at Skydio Autonomy in Spring 2019. Special thanks to Somil Bansal for his related research internship in 2018; Somil is a final-year Ph.D. student at UC Berkeley, advised by Claire Tomlin.